🧠 Reinforcement Learning

This is an example reinforcement learning training workflow built on the Isaac Gym simulation platform.

This guide covers environment setup, dependency installation, and the training and testing procedures, so that users can build and validate motion control policies for PND robots in a GPU-accelerated physics simulation environment.

🖥️ Hardware & System Requirements

Isaac Gym uses GPU-accelerated simulation. The recommended configuration is as follows:

| Item | Recommended Configuration |
|---|---|
| Operating System | ≥ Ubuntu 20.04 x86_64 (Mac/Windows not supported yet) |
| GPU | NVIDIA RTX Series (recommended ≥ 8 GB VRAM) |
| Driver Version | ≥ 525 |
| Python Version | Python 3.8 |
| Supported Model | Adam Lite |
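
The driver and GPU recommendations can be checked from a terminal before installing anything. The sketch below is illustrative only: the helper name `driver_ok` is made up for this example, and it compares just the major driver version against 525.

```shell
# Hypothetical helper: succeeds if the major driver version is at least 525.
driver_ok() {
    major="${1%%.*}"          # "535.104.05" -> "535"
    [ "$major" -ge 525 ]
}

if command -v nvidia-smi >/dev/null 2>&1; then
    # Driver version of the first GPU, e.g. "535.104.05"
    driver="$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)"
    if driver_ok "$driver"; then
        echo "Driver $driver meets the >= 525 recommendation"
    else
        echo "Driver $driver is older than 525" >&2
    fi
    # GPU name and total VRAM, to compare against the 8 GB recommendation
    nvidia-smi --query-gpu=name,memory.total --format=csv,noheader
else
    echo "nvidia-smi not found; install the NVIDIA driver first" >&2
fi
```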

🌍 Environment & Dependencies

This section describes how to create the virtual environment required for running reinforcement learning examples, and how to install PyTorch, Isaac Gym, rsl_rl, and other dependencies.

1. Create a Virtual Environment (using Conda)

It is recommended to use Conda to create an isolated virtual environment to avoid dependency conflicts.

If Conda is already installed on your system, you may skip to 1.2 Create New Environment.

1.1 Install Miniconda (if not installed)

Miniconda is a minimal installer for Conda and provides a quick way to create and manage Python environments.

mkdir -p ~/miniconda3

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -O ~/miniconda3/miniconda.sh

bash ~/miniconda3/miniconda.sh -b -u -p ~/miniconda3

rm ~/miniconda3/miniconda.sh

Initialize Conda:
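
A typical way to initialize Conda after installing Miniconda into `~/miniconda3` is sketched below. It assumes bash is your shell; substitute `zsh` or another shell name if needed. Open a new terminal afterwards so the change takes effect.

```shell
# Path used by the Miniconda install commands in this section
CONDA_BIN="$HOME/miniconda3/bin/conda"

if [ -x "$CONDA_BIN" ]; then
    # Adds Conda's startup snippet to ~/.bashrc (assumption: bash is the shell in use)
    "$CONDA_BIN" init bash
else
    echo "conda not found at $CONDA_BIN; check the install step" >&2
fi
```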

1.2 Create New Environment
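
A minimal sketch of creating the environment. The name `pnd_rl` is a placeholder chosen for illustration, not a name the project requires; the Python version follows the requirements table.

```shell
ENV_NAME="pnd_rl"   # hypothetical environment name; pick any name you like
PY_VER="3.8"        # Python version listed in the requirements table

if command -v conda >/dev/null 2>&1; then
    conda create -n "$ENV_NAME" python="$PY_VER" -y
else
    echo "conda is not on PATH; initialize Conda and open a new terminal" >&2
fi
```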

1.3 Activate Environment
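
Activation might look like the following, again using the placeholder name `pnd_rl`; use whatever name your environment actually has. In an interactive terminal where Conda has been initialized, the `conda activate` line alone is enough. The `eval` line is only needed when running from a script.

```shell
ENV_NAME="pnd_rl"   # hypothetical environment name

if command -v conda >/dev/null 2>&1; then
    # Load Conda's shell functions so `conda activate` also works inside scripts
    eval "$(conda shell.bash hook)"
    conda activate "$ENV_NAME"
    python --version   # should report Python 3.8.x inside the environment
else
    echo "conda is not on PATH; initialize Conda and open a new terminal" >&2
fi
```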

2. Install PyTorch

PyTorch is used for neural network training and inference.

conda install pytorch==2.3.1 torchvision==0.18.1 torchaudio==2.3.1 pytorch-cuda=12.1 -c pytorch -c nvidia -y
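
To confirm that the CUDA build of PyTorch was installed, a quick check like the following can help. It prints the PyTorch version, the CUDA version PyTorch was built against, and whether a GPU is visible. Run it inside the activated environment.

```shell
# One-line Python check passed to the interpreter of the active environment
CHECK='import torch; print(torch.__version__, torch.version.cuda, torch.cuda.is_available())'

python -c "$CHECK" || echo "PyTorch check failed; is the environment activated?" >&2
```

If the last value printed is `False`, PyTorch cannot see the GPU, and the driver installation is usually the first thing to check.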

3. Install Isaac Gym

Isaac Gym is NVIDIA’s GPU-accelerated physics simulation platform and is the core component of this training workflow.

3.1 Download & Install

Download Isaac Gym Preview 4 from the official website:

https://developer.nvidia.com/isaac-gym

Then extract the package and install from the python directory:
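
A sketch of these two steps, assuming the download was saved as `IsaacGym_Preview_4_Package.tar.gz` in the current directory and unpacks into `isaacgym/`. The file name is an assumption and may differ for your download.

```shell
ARCHIVE="IsaacGym_Preview_4_Package.tar.gz"   # assumed file name of the download

if [ -f "$ARCHIVE" ]; then
    tar -xzf "$ARCHIVE"                        # unpacks into ./isaacgym
    # Editable install of the Python bindings into the active environment
    cd isaacgym/python && pip install -e .
else
    echo "$ARCHIVE not found in $(pwd); adjust ARCHIVE to match your download" >&2
fi
```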

3.2 Verification

Run the sample demo:
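
The demo with the falling balls is `1080_balls_of_solitude.py` in the examples shipped with Isaac Gym. The sketch below assumes it is run from the directory that contains the extracted `isaacgym/` folder, with the Conda environment activated.

```shell
EXAMPLES_DIR="isaacgym/python/examples"   # relative to where the package was extracted

if [ -d "$EXAMPLES_DIR" ]; then
    cd "$EXAMPLES_DIR" && python 1080_balls_of_solitude.py
else
    echo "$EXAMPLES_DIR not found; run this from the folder containing isaacgym/" >&2
fi
```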

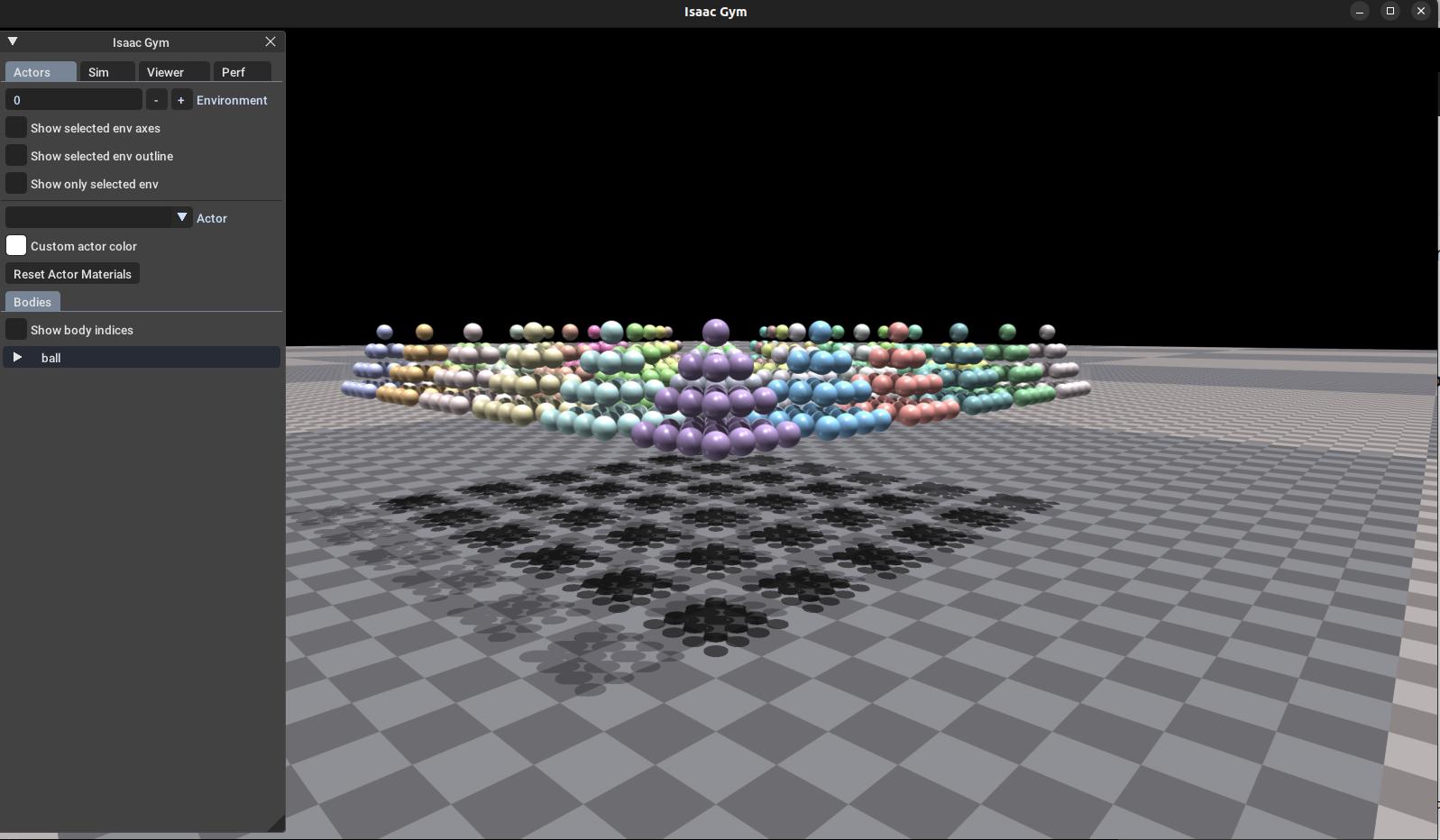

If the simulation window pops up and displays 1080 falling balls, the installation is successful.

If issues occur, refer to isaacgym/docs/index.html.
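
One commonly reported problem when running the examples inside a Conda environment is an `ImportError` saying that `libpython3.8.so.1.0` cannot be found. A common workaround, offered here as an assumption rather than an official fix, is to add the environment's `lib` directory to `LD_LIBRARY_PATH`. The helper name `add_conda_lib_path` is made up for this example.

```shell
# Hypothetical helper: prepend <env prefix>/lib to LD_LIBRARY_PATH
add_conda_lib_path() {
    export LD_LIBRARY_PATH="$1/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"
}

# CONDA_PREFIX is set by `conda activate` and points at the active environment
if [ -n "${CONDA_PREFIX:-}" ]; then
    add_conda_lib_path "$CONDA_PREFIX"
fi
```

The setting lasts only for the current terminal session, so it has to be repeated in each new terminal.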

4. Install rsl_rl

rsl_rl is a reinforcement learning library from leggedrobotics that provides algorithms such as PPO.

git clone https://github.com/leggedrobotics/rsl_rl.git

cd rsl_rl

git checkout v1.0.2

pip install -e .

🏋️ Training Workflow

1. Download the Official PND Example Code

pnd_rl_gym is PND’s reinforcement learning training project. It contains the environments, configurations, and training scripts.

1.1 Clone Repository

1.2 Install

2. Activate Environment

3. Start Training

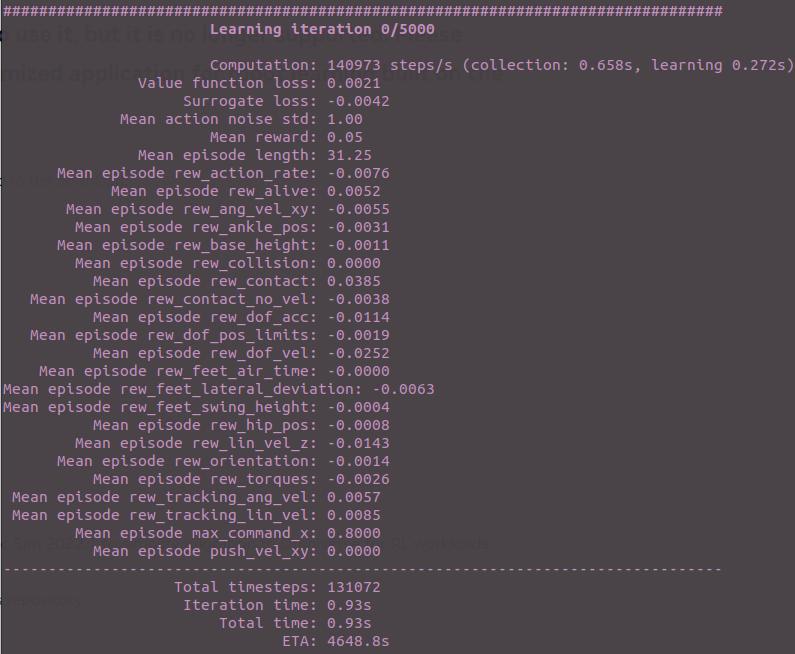

If the terminal prints logs similar to the above, training has started successfully.

4. Run Testing

📚 Acknowledgements

Last Updated: 2025-11-26